Spectral Representations for Convolutional Neural Networks

Implementation of Spectral Representations for Convolutional Neural Networks

The project is based on the 2015 paper by Oren Rippel et al. (arXiv link).

The proposals in the paper aim to improve the performance of Convolutional Neural Networks by exploiting the spectral domain of images (Fast Fourier Transforms are used to quickly convert images from the spatial to the spectral domain).

Essentially, the proposals can be summarized as three main ideas:

- Spectral Pooling, where the pooling operation happens in the spectral domain of the input images.

- Spectral Dropout, where frequencies are stochastically chosen to be dropped to mimic the regularization effect of standard dropout.

- Spectral Parameterization, where the image filters are initialized in the spectral domain.
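To make the spectral dropout idea concrete, here is a minimal NumPy sketch. This README does not spell out how the dropped frequencies are sampled, so the scheme below (a randomly drawn low-pass cutoff per axis) is an assumption, and the function name `spectral_dropout` and the `min_keep` parameter are hypothetical, not taken from the project code.

```python
import numpy as np

def spectral_dropout(image, rng, min_keep=1):
    """Zero out all frequencies above a randomly drawn cutoff (assumed scheme)."""
    h, w = image.shape
    # Move the zero-frequency component to the centre of the spectrum.
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    # Draw a random cutoff per axis; everything beyond it is dropped.
    keep_h = rng.integers(min_keep, h // 2 + 1)
    keep_w = rng.integers(min_keep, w // 2 + 1)
    # Distance of every row/column from the centred zero frequency.
    rows = np.abs(np.arange(h) - h // 2)
    cols = np.abs(np.arange(w) - w // 2)
    # The mask is symmetric about the centre, so the output stays real-valued.
    mask = (rows[:, None] <= keep_h) & (cols[None, :] <= keep_w)
    filtered = spectrum * mask
    # Undo the shift and return to the spatial domain.
    dropped = np.real(np.fft.ifft2(np.fft.ifftshift(filtered)))
    return dropped, mask

rng = np.random.default_rng(0)
noisy_image = rng.random((8, 8))
regularized, kept = spectral_dropout(noisy_image, rng)
```

Because the zero-frequency term is always kept, the mean intensity of the input is preserved while the higher frequencies are removed at random.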

We were able to successfully implement all the novel ideas presented in the paper and replicate the results reported by the authors.

Spectral Pooling

Spectral Pooling is a dimension reduction technique the authors introduced wherein the spectral representation of the image is truncated using a low pass filter and the image is later rebuilt with inverse transformations.

This technique is supported by the fact that higher frequencies in the spectral representations of images tend to encode noise and edges. Removing these frequencies reduces the dimensionality while minimizing the loss of information.

The implementation involves:

- Take the DFT of the image and shift the zero-frequency component to the center.

- Implement a low-pass filter and apply it to the shifted DFT.

- Make sure the filtered DFT still corresponds to a real-valued image (that is, it remains conjugate-symmetric), which involves treating corner cases when the shapes are odd.

- Take the inverse DFT to get the spatial representation of the new image.
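The steps above can be sketched in NumPy as follows. This is a simplified illustration, not the project's code: the function name `spectral_pool` is hypothetical, the low-pass filter is assumed to be a centred crop of the shifted spectrum, and the corner-case treatment is replaced by simply discarding the imaginary part of the result.

```python
import numpy as np

def spectral_pool(image, out_h, out_w):
    """Downsample a 2-D real image by cropping its centred DFT."""
    h, w = image.shape
    # Step 1: DFT, then shift the zero-frequency component to the centre.
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    # Step 2: low-pass filter, keeping only the central out_h x out_w block.
    top = h // 2 - out_h // 2
    left = w // 2 - out_w // 2
    cropped = spectrum[top:top + out_h, left:left + out_w]
    # Step 3 (simplified assumption): no explicit corner-case handling here;
    # any imaginary residue is dropped after the inverse transform instead.
    # Step 4: undo the shift and take the inverse DFT.
    pooled = np.fft.ifft2(np.fft.ifftshift(cropped))
    # Rescale so the mean intensity of the input is preserved.
    scale = (out_h * out_w) / (h * w)
    return np.real(pooled) * scale

rng = np.random.default_rng(0)
image = rng.random((64, 64))
small = spectral_pool(image, 8, 8)  # 64x fewer values than the input
```

Unlike max pooling, the output size is not tied to an integer stride, so any target shape up to the input shape can be requested.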

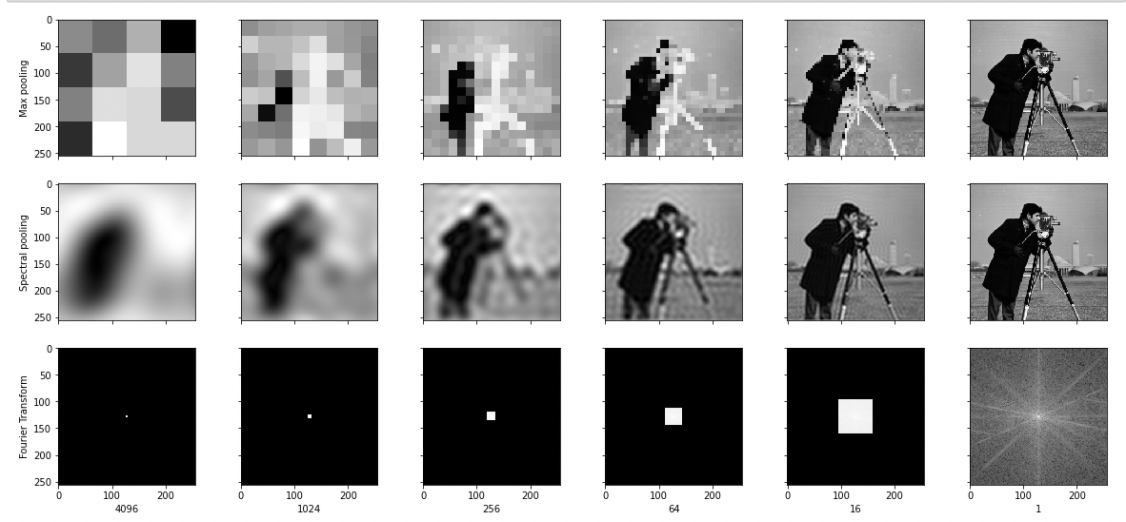

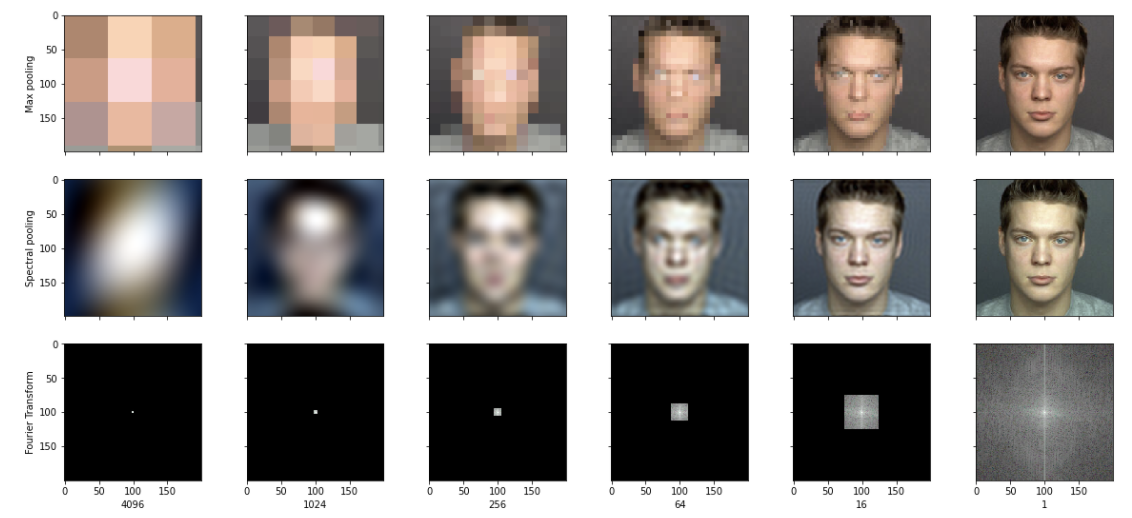

The first row shows the images after max pooling with the dimension reduction ratio described below. The second row shows the images after spectral pooling with the same dimension reduction ratio applied to the spectral representation. The third row visualizes the frequencies that are kept after the low-pass filter for the given dimension reduction ratio.

Spectral pooling is clearly much better at keeping the overall structure of the image intact under extreme amounts of pooling.

In the most extreme case of dimension reduction (by a factor of 4096), the max pooling output has lost all resemblance to the original image, whereas with spectral pooling and just 8 frequencies we can still see the silhouette of the man taking the photo.

Spectral Representation

In a traditional CNN, all the learnable parameters (the filter weights as well as the associated bias terms) are defined in the real (spatial) domain.

In this implementation, however, the aim was to design a CNN with spectrally parameterized filters.

We did this by initializing our parameter weights as complex valued coefficients of the DFT of the filter weights rather than the filter weights themselves.

To do this we first created a custom spectralConv2D layer in Keras.

Within this layer we built two separate kernels for representing the real and imaginary parts of our complex-valued kernel.

This was done because Keras doesn’t provide support for learning complex parameters directly. Next, we combined these two kernels into a single complex filter using tf.complex.

We then took the inverse DFT of this filter to get its spatial representation, which is ultimately used in the convolution operation with the input tensor (which is already in the spatial domain).
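The parameterization described above can be illustrated with a small NumPy sketch. It is not the spectralConv2D layer itself: the helper name `spectral_to_spatial`, the kernel shape, and the initialization from a random spatial filter are assumptions made for illustration. In the Keras layer, `tf.complex` and a TensorFlow inverse FFT would play the roles of the complex addition and `np.fft.ifft2` used here.

```python
import numpy as np

def spectral_to_spatial(kernel_real, kernel_imag):
    """Build a complex spectrum from two real arrays and invert it."""
    # Combine the two real-valued parameter arrays into one complex filter.
    spectrum = kernel_real + 1j * kernel_imag
    # Inverse DFT over the two spatial axes gives the spatial filter.
    spatial = np.fft.ifft2(spectrum, axes=(0, 1))
    # The convolution itself operates on real-valued weights.
    return np.real(spatial)

rng = np.random.default_rng(0)
# Assumed kernel layout: (height, width, in_channels, out_channels).
spatial_init = rng.normal(size=(3, 3, 1, 4))
# Initialize the learnable parameters as the DFT coefficients of the filter.
coeffs = np.fft.fft2(spatial_init, axes=(0, 1))
kernel_real, kernel_imag = coeffs.real, coeffs.imag
# Recover the spatial filter that would be passed to the convolution.
spatial_filter = spectral_to_spatial(kernel_real, kernel_imag)
```

In this sketch the round trip reproduces the spatial filter the coefficients were derived from; during training, gradients would update `kernel_real` and `kernel_imag` instead of the spatial weights.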

Results

We built different CNN architectures and their spectral equivalents, in which the convolutional layers are replaced with spectral convolutional layers, max-pooling layers with spectral pooling layers, and dropout layers with spectral dropout layers.

The training runs show that the spectral CNN architectures were always faster in reaching the validation accuracy achieved by the standard CNN architecture.

The spectral CNN architectures showed a training speed-up of roughly 2.1 to 5 times.

Furthermore, the spectral CNNs were able to achieve higher validation accuracy than the standard CNN architecture.